In this series we’ll look at Machine Learning by concentrating on the MENACE algorithm.

MENACE

The Matchbox Educable Noughts and Crosses Engine or MENACE was designed and built in 1961 by Donald Michie. Michie was a researcher in the field of Artificial Intelligence and during WWII worked alongside Alan Turing, Max Newman, and Jack Good at Bletchley Park.

Physical Composition

At the time of its creation Michie did not have sufficient access to a computer so he designed his algorithm around matchboxes. Each matchbox represents a state of a noughts and crosses (Tic-Tac-Toe) game. Due to symmetries in the game states the amount of matchboxes was reduced to 304. For example, a game state with a single X in any corner can be represented by a single matchbox as the grid can be rotated to match the depiction on the box.

At the start, each matchbox contains a number of beads corresponding the free spaces in that particular game state. As such, in the initial state of an empty grid the box contains 9 beads, all boxes with a single occupied square contain 8 and so on. These beads are coloured to represent a particular grid position and the same colour code is used for all boxes.

Playing the Game

The algorithm is designed such that MENACE always has the first turn. This biases the chance of it winning or drawing to give it time to “learn” how to play.

A random bead is taken from the initial blank grid box. This is MENACE’s move and this position is marked on the game grid corresponding to the colour of the bead. The game state and bead/position played is recorded. The human player then takes their turn.

MENACE’s next turn is chosen by selecting the matchbox matching the current game state which now includes the human player’s move. The grid may have to be rotated to match game state. Once the correct matchbox is found a random bead is removed from it and play continues as per the first move.

When a game is complete MENACE is “rewarded” or “punished” depending on the outcome. The rules for this are defined as follows:

- MENACE Wins: For each move MENACE made those beads are returned to their respective boxes plus 3 more beads of the same colour.

- MENACE Draws: For each move MENACE made those beads are returned to their respective boxes plus another bead of the same colour.

- MENACE Loses: The beads which MENACE played are not returned.

From these simple rules winning strategies are “rewarded” by adding beads and therefore increasing the change that these beads/moves are chosen for that game state in future. A draw results in a minimal increase in probability and a lose a reduction in probability.

Results

Noughts and Crosses between two humans familiar with the game usually results in a draw. This is because there is an optimal strategy which always blocks the opponent from completing three-in-a-row.

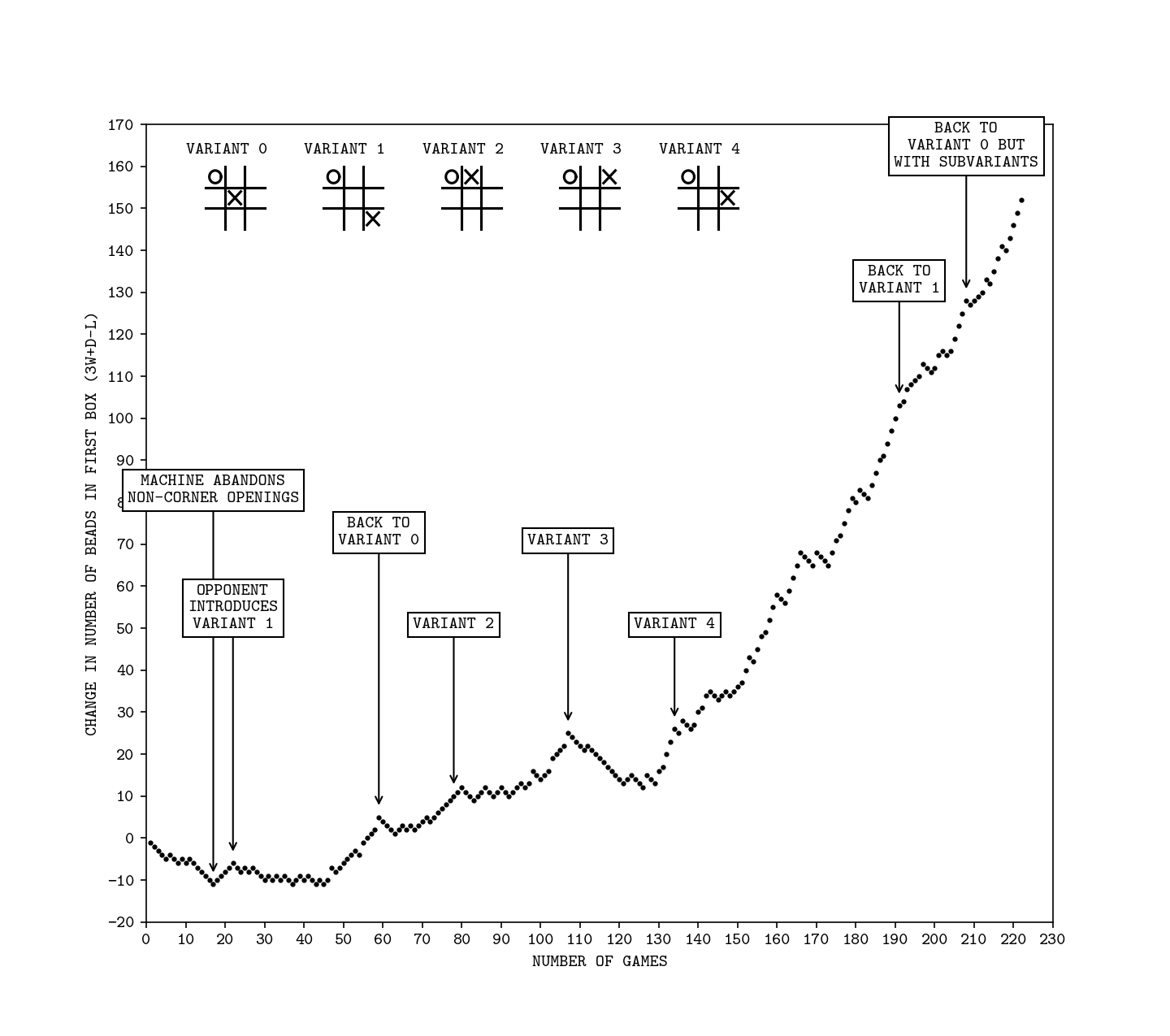

In practice Michie found that MENACE would always produce a positive trend after an initial amount of games where it began to tune its choices. This initial amount varies depending on the strategy of the opposing human.

Credit: Matthew Scroggs

Coming next

In the next article we’ll look at how to implement the MENACE algorithm in software and see if we can replicate Michie’s findings. We’ll then see if there are any problems with the algorithm and if we can make any improvements.